Associative memories are models that store and recall patterns. Pattern recall (associative recall) is a process whereby an associative memory, upon receiving a potentially corrupted memory query, retrieves the associated value from memory. Pattern storage is a process whereby an associative memory adjusts its parameters such that the new pattern can be recalled at a later date. Jason Yoo, a UBC CS PhD student and PLAI group member, just released a paper on arXiv about a novel neural network associative memory called BayesPCN. BayesPCN, unlike standard neural networks, is able to memorize its training data in one pass by combining predictive coding and locally conjugate Bayesian updates. Notably, this means that BayesPCN does not use backpropagation to “learn” its memory parameters.

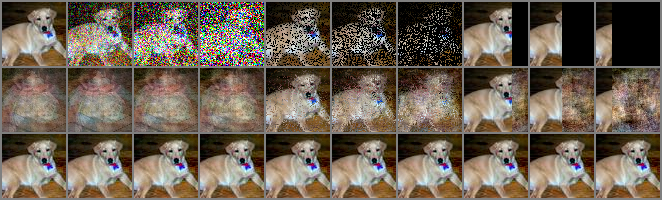

A BayesPCN model sequentially storing the training images (shown on the left) and performing associative recall on the corrupted query images (shown on the right). BayesPCN can view a datapoint just once and encode the information needed to perform associative recall into its neural network synaptic weights. Crucially, this encoding process does not significantly interfere with the previous datapoint encodings, allowing BayesPCN to faithfully recall past observations.

Humans and computers make extensive use of associative memories. Have you ever heard a familiar tune at a cafe and played the subsequent tunes inside your head? Or tried to remember your oldest memory from childhood? If so, you have engaged in associative recall. Similarly, a computer’s random-access memory (RAM) accepts address bits as inputs and retrieves the corresponding value bits stored at that address. However, there is a difference between the kind of associative recall carried out by your brain and your computer. RAM’s associative recall is brittle to noise – if even one of the address bits is corrupted, RAM will error or return completely different value bits. On the other hand, human associative recall is robust to noise. Even if a non-negligible percentage of neurons responsible for posing the query to your associative memory wrongfully decide not to fire, your brain, most likely, will still be able to recall the associated value of interest.
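
To make the contrast concrete, here is a minimal sketch of the two kinds of recall. It is only an illustration: the stored bit patterns, the values, and the helper names (`exact_lookup`, `nearest_recall`) are made up for this example, and nearest-match by Hamming distance stands in for noise-tolerant recall in general, not for how BayesPCN or the brain works.

```python
# Exact-address lookup (RAM-like) versus noise-tolerant nearest-match recall.
# All keys and values below are hypothetical toy data.
memory = {
    (0, 0, 1, 1, 0, 1, 0, 1): "tune A",
    (1, 1, 0, 0, 1, 0, 1, 0): "tune B",
}

def exact_lookup(address):
    """RAM-like recall: the address must match bit for bit."""
    return memory.get(address)

def hamming(a, b):
    """Number of positions at which two bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))

def nearest_recall(query):
    """Noise-tolerant recall: return the value whose key is closest to the query."""
    best_key = min(memory, key=lambda key: hamming(key, query))
    return memory[best_key]

# The key for "tune A" with its last bit flipped.
corrupted = (0, 0, 1, 1, 0, 1, 0, 0)

print(exact_lookup(corrupted))    # None: one flipped bit breaks exact lookup
print(nearest_recall(corrupted))  # tune A: the closest stored key still wins
```

A single flipped bit is enough to make the exact lookup fail, while the nearest-match recall still returns the intended value.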

Associative memories in machine learning are designed to support noise-tolerant recall and have been used to solve a wide range of problems from sequence processing to pattern detection. In addition, their study has allowed us to build models that replicate aspects of biological intelligence and provided novel perspectives on seemingly unrelated machine learning toolsets like dot-product attention and transformers. A particularly interesting application of associative memories has been pairing them with neural controllers that learn to store and recall hidden layer activations to better perform downstream tasks. Intuitively, this allows a neural controller to better integrate information from the distant past since remembering is reduced to posing a (potentially corrupted) query to its associative memory. This is not unlike what humans do and such approaches have set state-of-the-art scores on several sequence processing tasks.

However, a key requirement of associative memories that can be used in such settings is the ability to perform rapid and incremental pattern storage. It is well known that many offline-learned machine learning models suffer from catastrophic forgetting, a phenomenon where old information is significantly overwritten by new information, when they are trained continually without safeguards. Many modern associative memories lack explicit mechanisms that mitigate catastrophic forgetting despite their impressive recall capabilities.

This is what motivated us to design BayesPCN, a novel neural network associative memory that can be stacked arbitrarily deep. BayesPCN can perform one-shot updates of its model parameters to store new observations and is the first parametric associative memory to continually learn hundreds of very high-dimensional observations (> 10,000 dimensions) while maintaining recall performance comparable to state-of-the-art offline-learned associative memories. In addition, BayesPCN supports an explicit forgetting mechanism that gradually erases old information to free its memory. Grounded in the theory of predictive coding from neuroscience, BayesPCN’s inference and learning dynamics are more biologically plausible than the commonly used backpropagation algorithm, as all model computations rely only on local information.

We first demonstrate BayesPCN’s ability to one-shot update its model parameters to store new datapoints and correctly recall stored datapoints from severely corrupted memory queries. The following image shows BayesPCN’s recall result for a 12,288-dimensional (64 × 64 × 3) Tiny ImageNet image.

BayesPCN’s recall result before and after the one-shot write of the top left image into memory. The first row contains example memory queries, the second row contains BayesPCN’s recall outputs before write, and the third row contains BayesPCN’s recall outputs immediately after write. The four left columns illustrate auto-associative recall (no pixels are fixed) and the remaining columns illustrate hetero-associative recall (some pixels are fixed).

We observe that BayesPCN can recover the original image given its variant corrupted with white noise, random pixel blackout, and structured pixel blackout of varying intensities once the image is stored into memory.
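
For readers who want to build similar queries, the three corruption types can be sketched as below. This is an illustrative sketch and not the code used for the figures: the function names, the `fill` value used for blacked-out pixels, and the choice to black out the bottom rows for the structured case are all assumptions.

```python
import random

def white_noise(image, std, rng):
    """Add independent Gaussian noise to every pixel."""
    return [[p + rng.gauss(0.0, std) for p in row] for row in image]

def random_blackout(image, fraction, rng, fill=0.0):
    """Replace each pixel with `fill` independently with probability `fraction`."""
    return [[fill if rng.random() < fraction else p for p in row] for row in image]

def structured_blackout(image, fraction, fill=0.0):
    """Replace the bottom `fraction` of rows with `fill` (a contiguous block)."""
    keep = len(image) - int(len(image) * fraction)
    return [row[:] if r < keep else [fill] * len(row) for r, row in enumerate(image)]

# A hypothetical 4 x 4 single-channel "image" of ones.
image = [[1.0] * 4 for _ in range(4)]
rng = random.Random(0)

noisy = white_noise(image, std=0.2, rng=rng)
speckled = random_blackout(image, fraction=0.5, rng=rng)
half_hidden = structured_blackout(image, fraction=0.5)
print(half_hidden)
```

In the hetero-associative setting, the pixels that were not blacked out would be the ones held fixed during recall.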

We now demonstrate BayesPCN’s ability to continue faithfully recalling datapoints as additional datapoints are sequentially stored into memory. The following GIF details the progression of BayesPCN’s recall result for a particular Tiny ImageNet image as more and more new Tiny ImageNet images are subsequently stored into memory.

BayesPCN’s recall result after storing the top left image into memory. The top row depicts the query images given to BayesPCN as inputs and the bottom row depicts BayesPCN’s recall output for the query image above. The top right caption, “# of subsequent writes”, denotes the number of new Tiny ImageNet images that have been sequentially stored into memory since the storing of the flower image.

We observe that BayesPCN can recover the original image given its severely corrupted versions after hundreds of additional images are incrementally written into memory. Noise-tolerant recall that deteriorates this slowly over hundreds of additional sequential memory writes has never been observed before.

We now investigate whether BayesPCN can generalize – does BayesPCN get better at reconstructing unseen images from the same data distribution the more datapoints it observes? The GIF below, which details the progression of BayesPCN’s “recall” applied to a particular Tiny ImageNet image that has never been written into memory, suggests that the answer is yes.

BayesPCN’s recall result for an image not stored in memory. The top row depicts the query images given to BayesPCN as inputs and the bottom row depicts BayesPCN’s recall output for the query image above. The top right caption, “# of written datapoints”, denotes the total count of Tiny ImageNet images that have been stored into memory.

While the reconstruction quality is not good, we can clearly see it improving as more images are written into the model. This, along with related work, suggests that BayesPCN may be able to do more than associative recall.

Lastly, we visualize the effect of intentional forgetting on BayesPCN’s recall. BayesPCN can intentionally and gradually forget old datapoints to free its memory in order to better store new datapoints. In the limit of infinitely many applications, this forgetting mechanism reverts BayesPCN’s parameters to their original state before any datapoint was stored. The following visualizations show this.

BayesPCN’s recall result when no datapoint is stored in the model. The top row depicts the query images given to BayesPCN as inputs and the bottom row depicts BayesPCN’s recall output for the query image above. Grey is the color that corresponds to the value of zero.

BayesPCN’s recall result after storing the top left image into memory and applying the ‘forget’ operation to the model multiple times. The top row depicts the query images given to BayesPCN as inputs and the bottom row depicts BayesPCN’s recall output for the query image above. The top right caption, “# of ‘forget’ operations”, denotes the number of ‘forget’ operations (forget strength = 0.01) applied to the model since the storing of the flower image.

The recall outputs quickly come to resemble the empty memory’s output as more forget operations are applied. The auto-associative recall results in the four left columns are forgotten faster than the hetero-associative recall results in the remaining columns because the former have no fixed pixel values to ground the recall result in the query images.
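
The claim that repeated forgetting returns the model to its initial state can be illustrated with a toy calculation. The sketch below tracks a Gaussian belief over a single weight and is not BayesPCN’s actual rule, which this post does not spell out: the square-root shrinkage of the mean and the linear mixing of the variance are assumptions, chosen so that the prior is the fixed point of the update. Only the forget strength of 0.01 comes from the caption above.

```python
import math

def forget(mean, var, prior_mean=0.0, prior_var=1.0, strength=0.01):
    """One hypothetical 'forget' step: move a Gaussian belief toward its prior."""
    keep = 1.0 - strength
    new_mean = prior_mean + math.sqrt(keep) * (mean - prior_mean)
    new_var = keep * var + strength * prior_var
    return new_mean, new_var

# A confident belief about one weight after storing data (made-up numbers).
mean, var = 2.5, 0.05

for _ in range(2000):
    mean, var = forget(mean, var)

# After many steps the belief is numerically indistinguishable from the prior.
print(mean, var)
```

Each step shrinks the gap between the current belief and the prior by a constant factor, so the gap decays geometrically and vanishes only in the limit.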

Having demonstrated some results, we briefly explain the intuition behind BayesPCN’s pattern recall and pattern storage. Given a query vector, BayesPCN recall iteratively alternates between finding the hidden layer activations that most likely generated the query vector and finding the query vector that is most likely to be generated by those hidden layer activations. Given a data vector to store, BayesPCN learning first finds the hidden layer activations that most likely generated the data vector and then updates the neural network parameters via a locally conjugate Bayesian update, such that the model is more likely to generate that pair of data vector and hidden layer activations in the future. The key characteristic of BayesPCN that mitigates catastrophic forgetting is that, unlike most other associative memories, it maintains a probabilistic belief over its model parameters.
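
To give a feel for the storage step, the sketch below applies a standard conjugate Gaussian update to the incoming weights of a single output unit. It is a simplification under stated assumptions and not the paper’s implementation: the model is one linear unit with known noise variance, the hidden activations `h1` and `h2` are given by hand, and the inference step that would normally find them is omitted. All names and numbers are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def conjugate_update(mean, cov, h, x, noise_var=0.01):
    """Gaussian posterior over one weight row after observing x = w . h + noise."""
    cov_h = [dot(row, h) for row in cov]           # covariance times activations
    denom = noise_var + dot(h, cov_h)              # predictive variance of x
    gain = [c / denom for c in cov_h]
    error = x - dot(mean, h)                       # local prediction error
    new_mean = [m + g * error for m, g in zip(mean, gain)]
    n = len(h)
    new_cov = [[cov[i][j] - gain[i] * cov_h[j] for j in range(n)] for i in range(n)]
    return new_mean, new_cov

# Prior belief over three incoming weights: zero mean, identity covariance.
mean = [0.0, 0.0, 0.0]
cov = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

# Two (activations, target) pairs, each written exactly once, in sequence.
h1, x1 = [1.0, 0.0, 0.0], 0.8
h2, x2 = [0.5, 1.0, 0.0], -0.5
mean, cov = conjugate_update(mean, cov, h1, x1)
mean, cov = conjugate_update(mean, cov, h2, x2)

# Both targets are still predicted closely after the second write.
print(dot(mean, h1), dot(mean, h2))
```

After the first write, the belief about the first weight is already confident (low variance), so the second write mostly adjusts the still-uncertain second weight. This is the sense in which keeping a belief over the parameters, rather than a point estimate, limits interference between successive writes.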

There are a number of fascinating future research directions for BayesPCN. The first is investigating how to continually store even more datapoints into BayesPCN (thousands and more) without degrading recall performance. Currently, BayesPCN’s recall abruptly deteriorates for all datapoints once “too much” data is stored into memory, where “too much” depends on model hyperparameters but typically ranges from hundreds to the low thousands. While forgetting empirically alleviates this issue, further investigation into this behavior would be insightful. The second is applying BayesPCN to tasks that can benefit from robust associative recall, for example sequence processing and continual learning tasks. Lastly, extending BayesPCN to support sequence memorization would be of great interest. If you want to learn more, feel free to check out our paper.